Wave-Particle Duality: So Much for the Atom

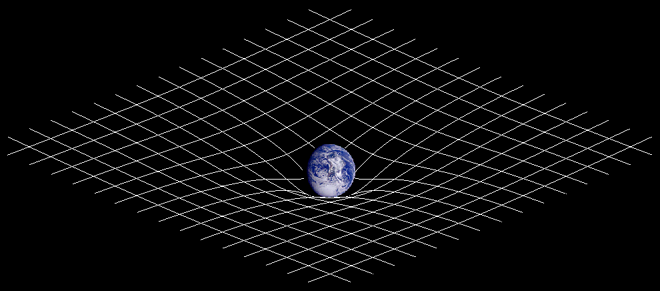

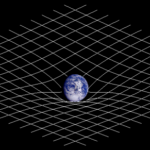

From Charlie’s standpoint, Relativity Theory could be grasped intellectually by the educated, intelligent mind. You didn’t need advanced degrees or a deep understanding of complex mathematics to understand that at a very basic level, Relativity Theory implied that basic measurements like speed, distance and even mass were relative and depended upon the observer’s frame of reference, that mass and energy were basically convertible into each other and equivalent, related by the speed of light that moved at a fixed speed no matter what your frame of reference, and that space and time were not in fact separate and distinct concepts but in order for a more accurate picture of the universe to emerge they needed to be combined into a single notion of spacetime. Relativity says that even gravity’s effect was subject to the same principles that played out at the cosmic scale, i.e. that spacetime “bends” at points of singularity (black holes for example), bends to the extent that light in fact is impacted by the severe gravitational forces at these powerful places in the universe. And indeed that our measurements of time and space were “relative”, relative to the speed and frame of reference from which these measurements were made, the observer was in fact a key element in the process of measurement.

If you assumed all these things, you ended up with a more complete and accurate mathematical and theoretical understanding of the universe than you had with Newtonian mechanics, one powerful enough that, despite the best efforts of many great minds over the last 100 years or so, it has yet to be supplanted by anything better, at least at the macro scale of the universe. Charlie didn’t doubt that Relativity represented a major scientific and even metaphysical step forward in mankind’s understanding of the physical universe, but a subtle and quite distinctive feature of this model was that it reinforced a deterministic and realist model of the universe. In other words, Relativity implicitly assumed that objects in the physical world did in fact exist, i.e. they were “real”, real in the sense that they had an absolute existence somewhere in the spacetime continuum that could be described in terms of quantitative data like speed, mass, velocity, etc. Furthermore, if you knew a set of starting criteria, what scientists like to call a “system state”, as well as the set of variables and forces that acted on said system, you could in turn predict with certainty the outcome of said forces on such a system, i.e. the observed properties of the objects in the system after the forces had acted upon them. The physical world, in other words, was fully deterministic.

Charlie didn’t want to split hairs on these seemingly inconsequential and subtle assumptions, assumptions that underpinned not only Einstein’s Relativity but to a great extent Newtonian mechanics as well, but these were in fact very modern metaphysical assumptions and had not been assumed, at least not to the degree of certainty of modern times, in theoretical models of reality that existed prior to the Scientific Revolution. Prior to Newton, the world of the spirit, theology in fact, was very much considered to be just as real as the physical world, the world governed by science. This was true not only in the West but also in the East, and to a great extent remains true in Eastern philosophical thought today, whereas in the West not so much.

But at their basic, core level, these concepts could be understood, grasped as it were, by the vast majority of the public, even if they had very little if any bearing on their daily lives and didn’t fundamentally change or shift their underlying religious or theological beliefs, or even their moral or ethical principles. Relativity was accepted in the modern age, as were its deterministic and realistic philosophical and metaphysical assumptions, the principles just didn’t really affect the subjective frame of reference, the mental or intellectual frame of reference, within which the majority of humanity perceived the world around them. Relativity itself as a theoretical construct was relegated to the realm of physics, a problem which needed to be understood to pass a physics or science exam in high school or college, to be buried in your consciousness in lieu of our more pressing daily and life pursuits be they family, career and money, or other forms of self-preservation in the modern, Information Age; an era most notably marked by materialism, self-promotion, greed, and capitalism, which interestingly enough all pay homage to realism and determinism to a large extent.

Quantum Theory was altogether different however. Its laws were more subtle and complex than the world described by classical physics, the world described in painstaking mathematical precision by Newton, Einstein and others. And after a lot of studying and research, the only conclusion that Charlie could definitively come to was that in order to understand Quantum Theory, or at least try to come to terms with it, a wholesale different perspective on what reality truly was, or at the very least how reality was to be defined, was required. In other words, in order to understand what Quantum Theory actually means, or in order to grasp the underlying intellectual context within which the behaviors of the underlying particles/fields that Quantum Theory describes were to be understood, a new framework of understanding, a new description of reality, must be adopted. What we considered to be “reality”, or what was “real”, as understood and implied by classical physics which had dominated the minds of the Western world for over 300 years since the publication of Newton’s Principia, needed to be abandoned, or at the very least significantly modified, in order for Quantum Theory to be comprehended in any meaningful way, in order for anyone to make any sense of what Quantum Theory “said” about the nature of the substratum of existence.

Things would never be the same from a physics perspective, this much was clear. Whether or not the daily lives of the bulk of those who struggle to survive in the civilized world would evolve in concert with these developments remained to be seen.

Quantum Mechanics is the branch of physics that deals with the behavior of particles and matter in the atomic and subatomic realms, or the quantum realm, so called given the quantized nature of “things” at this scale. So you have some sense of scale, an atom is 10⁻⁸ cm across give or take, and the nucleus, or center of an atom, which is made up of what we now call protons and neutrons, is approximately 10⁻¹² cm across. An electron, or a photon for that matter, cannot truly be measured from a size perspective in terms of classical physics for many of the reasons we’ll get into below as we explore the boundaries of the quantum world, but suffice it to say that at present our best estimate of the size of an electron is in the range of 10⁻¹⁸ cm or so.[1]

Whether or not electrons, or photons (particles of light) for that matter, really exist as particles whose physical size, and/or momentum can be actually “measured” is not as straightforward a question as it might appear and gets at some level to the heart of the problem we encounter when we attempt to apply the principles of existence or reality to the subatomic realm, or quantum realm, within the context of the semantic and intellectual framework established in classical physics that has evolved over the last three hundred years or so; namely as defined by independently existing, deterministic and quantifiable measurements of size, location, momentum, mass or velocity.

The word quantum comes from the Latin quantus, meaning “how much”, and it is used in this context to identify the behavior of subatomic things that move between discrete states rather than through a continuum of values or states, as is assumed in and fundamental to classical physics. The term itself had taken on meanings in several contexts within a broad range of scientific disciplines in the 19th and early 20th centuries, but it acquired its specific meaning in physics through the work of Max Planck at the turn of the 20th century, work out of which the field of Quantum Mechanics later grew, and quantization arguably represents the prevailing and distinguishing characteristic of reality at this scale.

Newtonian physics, or even the extension of Newtonian physics put forth by Einstein with Relativity Theory at the beginning of the twentieth century (a theory whose accuracy is well established via experimentation at this point), assumes that particles, things made up of mass, energy and momentum, exist independent of the observer or their instruments of observation. They are presumed to exist in continuous form, moving along specific trajectories, with properties (mass, velocity, etc.) that can only be changed by the action of some force upon them. This is the essence of Newtonian mechanics, upon which the majority of modern day physics, or at least the laws of physics that affect us here at a human scale, is defined, and philosophically it has at its heart the presumption of realism and determinism.

The only caveat to this view put forth by Einstein is that these measurements themselves, of the speed or even the mass or energy content of a specific object, can only be said to be defined according to these physical laws within the specific frame of reference of an observer. Their underlying reality is not questioned – these things clearly exist independent of observation or measurement, clearly (or so it seems) – but the values of the properties of these things are relative, i.e. they change depending upon the observer’s frame of reference. This is what Relativity tells us. So the velocity of a massive body, and even the measurement of time itself, which is a function of distance and speed, is a function of the relative speed and position of the observer who is performing said measurement.

For the most part, the effects of Relativity can be ignored when we are referring to objects on Earth that are moving at speeds that are minimal with respect to the speed of light and are less massive than say black holes. As we measure things at the cosmic scale, where distances are measured in terms of light years and black holes and other massive phenomena exist which bend spacetime (aka singularities) the effects of Relativity cannot be ignored however.[2]

Leaving aside the field of Cosmology for the moment and getting back to the history of the development of Quantum Mechanics, at the end of the 19th century Planck was commissioned by electric companies to create light bulbs that used less energy, and in this context was trying to understand how the intensity of electromagnetic radiation emitted by a black body (an object that absorbs all electromagnetic radiation regardless of frequency or angle of incidence) depended on the frequency of the radiation, i.e. the color of the light. In his work, and after several iterations of hypotheses that failed to have predictive value, he hit upon the theory that energy is only absorbed or released in quantized form, i.e. in discrete packets of energy he referred to as “bundles” or “energy elements”, the so-called Planck postulate. And so the field of Quantum Mechanics was born.[3]
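To make the Planck postulate a little more concrete, here is a minimal Python sketch. It assumes the standard textbook form of the postulate, E = n·h·ν, which the text above describes only in words; the function names and the 550 nm example wavelength are illustrative choices, not anything taken from Planck’s own work.

```python
# Minimal sketch of the Planck postulate, assuming the textbook form E = n * h * nu.
# Function names and the example wavelength are illustrative assumptions.

PLANCK_H = 6.62607015e-34       # Planck constant in joule-seconds (exact SI value)
SPEED_OF_LIGHT = 299_792_458.0  # speed of light in metres per second (exact SI value)


def quantum_energy(frequency_hz):
    """Energy of a single 'energy element' at the given frequency, in joules."""
    return PLANCK_H * frequency_hz


def allowed_energies(frequency_hz, n_max):
    """The discrete energies 0, h*nu, 2*h*nu, ... up to n_max quanta."""
    return [n * quantum_energy(frequency_hz) for n in range(n_max + 1)]


# Example: green light with a wavelength of roughly 550 nanometres.
green_frequency = SPEED_OF_LIGHT / 550e-9
print("one quantum of green light, in joules:", quantum_energy(green_frequency))
print("first few allowed energies:", allowed_energies(green_frequency, 3))
```

The point of the list returned by `allowed_energies` is what is missing from it: under the postulate there is no allowed value in between one multiple of h·ν and the next.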

Despite the fact that Einstein is best known for his mathematical models and theories describing gravity and light at a cosmic scale, his work was also instrumental in the advancement of Quantum Mechanics. For example, in his work on the effect of radiation on metals and on non-metallic solids and liquids, he explained how electrons are emitted from matter as a consequence of their absorption of energy from electromagnetic radiation of short wavelength, such as visible or ultraviolet light (the photoelectric effect). Einstein established that in certain experiments light appeared to behave like a stream of tiny particles, not just as a wave, lending more credence and authority to particle theories of the quantum realm. He therefore hypothesized the existence of light quanta, or photons as they were later called, laying the groundwork for subsequent wave-particle duality discoveries and reinforcing the discoveries of Planck with respect to black body radiation and its quantized behavior.[4]
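The particle-like behavior Einstein pointed to can be illustrated with a short sketch. It assumes the standard photoelectric relation, in which the maximum kinetic energy of an emitted electron is the photon energy h·c/λ minus the material’s work function; the work function of 2.3 eV used below is purely an illustrative assumption, not a value from the text.

```python
# Minimal sketch of the photoelectric relation K_max = h*c/lambda - work_function.
# The work function value is an illustrative assumption, not a measured figure.

PLANCK_H = 6.62607015e-34       # joule-seconds
SPEED_OF_LIGHT = 299_792_458.0  # metres per second
ELECTRON_VOLT = 1.602176634e-19 # joules per electron-volt


def photon_energy_ev(wavelength_m):
    """Energy carried by one photon of the given wavelength, in electron-volts."""
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m / ELECTRON_VOLT


def max_kinetic_energy_ev(wavelength_m, work_function_ev):
    """Maximum kinetic energy of an ejected electron; zero if no electron is ejected."""
    return max(0.0, photon_energy_ev(wavelength_m) - work_function_ev)


ASSUMED_WORK_FUNCTION_EV = 2.3  # hypothetical metal surface

for wavelength_nm in (700, 500, 300):
    energy = max_kinetic_energy_ev(wavelength_nm * 1e-9, ASSUMED_WORK_FUNCTION_EV)
    print(wavelength_nm, "nm ->", round(energy, 3), "eV")
```

In this picture, red light at 700 nm ejects no electrons from the assumed surface however bright it is, because each individual photon carries too little energy, which is hard to account for if light is only a continuous wave.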

Prior to the establishment of light’s properties as waves, and then in turn the establishment of the wave like characteristics of subatomic elements like photons and electrons by Louis de Broglie in the 1920s, it had been fairly well established that these subatomic entities, electrons and photons as they were later called, behaved like particles. However, the debate over and study of the nature of light and subatomic matter went all the way back to the 17th century, when competing theories of the nature of light were proposed by Isaac Newton, who viewed light as a system of particles, and Christiaan Huygens, who postulated that light behaved like a wave. It was not until the work of Einstein, Planck, de Broglie and other physicists of the twentieth century that these subatomic particles, both light and electrons, were shown to behave both like particles and like waves, the result dependent upon the experiment and the context of the system being observed. This paradoxical principle, known as wave-particle duality, is one of the cornerstones, and underlying mysteries, of Quantum Theory.

As part of the discoveries of subatomic particle wave-like behavior, what Planck discovered in his study of black body radiation, and Einstein as well within the context of his study of light and photons, was that the measurements or states of a given particle such as a photon or an electron had to take on values that were multiples of very small and discrete quantities, i.e. were non-continuous, the relation of which was represented by a constant value known as the Planck constant[5].

In the quantum realm then, there was not a continuum of values and states of matter as was assumed in physics up until that time, there were bursts of energies and changes of state that were ultimately discrete, and yet at the same time at fixed amplitudes or values, where certain states and certain values could in fact not exist, representing a dramatic departure from the way physicists, and the rest of us mortals, think about movement and change in the “real world”, and most certainly represented a significant departure from Newtonian mechanics upon which Relativity was based where the idea of continuous motion, in fact continuous existence, was never even questioned.

It is interesting to note that Planck and Einstein had a very symbiotic relationship toward the middle and end of their careers, and much of their work complemented and built upon each other’s. For example, Planck is said to have contributed to the establishment and acceptance of Einstein’s revolutionary concept of Relativity within the scientific community after it was introduced by Einstein in 1905, the theory of course representing a radical departure from the standard classical physical and mechanical models that had held up for centuries prior. It was in some sense through the collaborative work and studies of Planck and Einstein, then, that the field of Quantum Mechanics and Quantum Theory took the shape it has today: Planck, who defined the term quanta with respect to the behavior of elements in the realms of matter, electricity, gas and heat, and Einstein, who used the term to describe the discrete emissions of light, or photons.

The classic demonstration of light’s behavior as a wave, and perhaps one of the most astonishing and game changing experiments of all time, is what is called the double-slit experiment. In the basic version of this experiment, a light source such as a laser beam is shone at a thin plate that is pierced by two parallel slits. The light in turn passes through each of the slits and displays on a screen behind the plate. The image that is displayed on the screen behind the plate, as it turns out, is not one of a constant band of light behind each one of the slits, as you might expect if the light were simply a particle or set of particles. Instead, the light displayed on the screen behind the double-slitted slate is one of light and dark bands, indicating that the light is behaving like a wave and is subject to interference, the strength of the light on the screen cancelling itself out or becoming stronger depending upon how the individual waves interfere with each other. This behavior is exactly akin to what we consider fundamental wavelike behavior, for example the behavior of waves in water, which reinforce each other where peak meets peak and cancel each other out where peak meets trough.
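The light and dark bands can be sketched numerically. The snippet below assumes the idealized textbook model of two very narrow slits and a distant screen, where the relative brightness at a height y on the screen is cos²(π·d·y / (λ·L)); the slit separation, wavelength and screen distance are illustrative values chosen for the example, not figures from any particular experiment.

```python
import math

# Idealized two-slit interference, assuming very narrow slits and a distant screen.
# All numerical values below are illustrative assumptions.


def relative_intensity(y_m, slit_separation_m, wavelength_m, screen_distance_m):
    """Brightness at height y on the screen, relative to the central bright band."""
    phase = math.pi * slit_separation_m * y_m / (wavelength_m * screen_distance_m)
    return math.cos(phase) ** 2


def fringe_spacing(slit_separation_m, wavelength_m, screen_distance_m):
    """Distance between neighbouring bright bands on the screen."""
    return wavelength_m * screen_distance_m / slit_separation_m


WAVELENGTH = 650e-9        # red laser light, metres
SLIT_SEPARATION = 0.25e-3  # metres
SCREEN_DISTANCE = 1.0      # metres

spacing = fringe_spacing(SLIT_SEPARATION, WAVELENGTH, SCREEN_DISTANCE)
for step in range(5):
    y = step * spacing / 4
    brightness = relative_intensity(y, SLIT_SEPARATION, WAVELENGTH, SCREEN_DISTANCE)
    print(f"y = {y * 1e3:5.2f} mm   relative brightness = {brightness:.3f}")
```

Walking up the screen from the center, the brightness falls to zero halfway between two bright bands and then rises again, which is the banded pattern described above.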

What is even more interesting however, and was most certainly unexpected, is what happened once equipment was developed that could reliably send a single particle, an electron or photon for example, through a double-slitted slate. The individual particles did indeed end up at a single location on the screen after passing through just one of the slits, as was expected, but – and here was the kicker – the location on the screen that the particle ended up at, as well as which slit the particle appeared to pass through (in later versions of the experiment which slit “it” passed through could in fact be detected), was not consistent and followed seemingly random and erratic behavior. What researchers found as more and more of these subatomic particles were sent through the slate one at a time was that the same wave like interference pattern emerged that showed up when the experiment was run with a full beam of light, as was done by Young some 100 years prior[6].

So hold on for a second. Charlie had gone over this again and again, and all the literature he read on Quantum Theory and Quantum Mechanics pretty much said the same thing, namely that the heart of the mystery of Quantum Mechanics could be seen in this very simple experiment. And yet it was really hard, perhaps impossible, to understand what was actually going on, or at least to understand it without abandoning some of the very foundational principles of classical physics, for example that these things called subatomic particles actually existed as independent particles or “objects” as we might refer to them at the macroscopic scale, because they seemed to behave like waves when looked at in aggregate but at the same time behaved, sort of, like particles when looked at individually.

What was clear was that this subatomic particle, corpuscle or whatever you wanted to call it, did not appear to have a linear and fully deterministic trajectory in the classical physics sense. This much was very clear from the fact that the distribution against the back screen, when they were sent through the double-slit experiment as individual particles, appeared to be random. But what was more odd was that when the experiment was run one corpuscle at a time, again whatever that term really means at the quantum level, not only was the final location on the screen seemingly random individually, but the same aggregate pattern emerged after many, many single corpuscle experiment runs as when a full wave, or set of these corpuscles, was sent through the double slits.

So it appeared, and this was and still remains a very important, telling and mysterious feature of the behavior of these “things” at the subatomic scale, that not only did the individual photon seem to be aware of the final wave like pattern of its parent wave, but this corpuscle also appeared to be interfering with itself when it went through the two slits individually. Charlie wanted to repeat this for emphasis, because these conclusions came after a heck of a lot of research into Quantum Theory for a guy who was inherently lazy but was still trying to understand the source of the fundamentally mechanistic and materialistic world view which so dominates Western society today, a view which clearly rested on philosophical and metaphysical foundations stemming from classical physical notions of objective reality. With the double-slit experiment you could clearly see that the fundamental substratum of existence exhibited wave like as well as particle like behavior, and that when looked at at the individual “particle” level, whatever the heck that actually means at the subatomic scale, the individual particle seemed not only to be aware of its parent wave structure, but the experimental results seemed to imply that the individual particle was interfering with itself.

Furthermore, to make things even more mysterious, as the final location of each of the individual photons in the two slit and other related experiments was evaluated and studied, it was discovered that although the final location of an individual one of these particles could not be determined exactly before the experiment was performed, i.e. there was a fundamental element of uncertainty or randomness involved at the individual corpuscle level, it was discovered that the final locations of these particles measured in toto after many experiments were performed exhibited statistical distribution behavior that could be modeled quite precisely, precisely from a mathematical statistics and probability distribution perspective. That is to say that the sum total distribution of the final locations of all the particles after passing through the slit(s) could be established stochastically, i.e. in terms of well-defined probability distribution consistent with probability theory and well defined mathematics that governed statistical behavior. So in total you could predict what the particle like behavior would look like over a large distribution set of particles in the double slit experiment even if you couldn’t predict with certainty what the outcome would look like for an individual corpuscle.
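The combination of individual randomness and aggregate predictability can be imitated with a small simulation. This is only a sketch under stated assumptions: it takes the idealized cos² fringe pattern as the probability distribution for where a single particle lands, measures position in units of the fringe spacing, and uses simple rejection sampling to draw one landing position at a time. None of the function names come from the text, and the simulation says nothing about why nature behaves this way.

```python
import math
import random

# Sketch: single-particle hits drawn one at a time from an assumed cos^2 probability
# pattern. Positions are in units of the fringe spacing (an illustrative convention).


def detect_one_particle(rng):
    """Return one random landing position between -2 and 2 fringe spacings."""
    while True:
        u = rng.uniform(-2.0, 2.0)
        # Accept the candidate position with probability cos^2(pi * u).
        if rng.random() < math.cos(math.pi * u) ** 2:
            return u


def run_experiment(n_particles, seed=0):
    """Send particles through one at a time and record where each one lands."""
    rng = random.Random(seed)
    return [detect_one_particle(rng) for _ in range(n_particles)]


def histogram(hits, n_bins=16, low=-2.0, high=2.0):
    """Count how many hits fall in each equal-width strip of the screen."""
    counts = [0] * n_bins
    width = (high - low) / n_bins
    for u in hits:
        index = min(int((u - low) / width), n_bins - 1)
        counts[index] += 1
    return counts


hits = run_experiment(2000)
print("first five landing positions:", [round(u, 2) for u in hits[:5]])
for count in histogram(hits):
    print("#" * (count // 10))
```

The first few landing positions look like noise, but the printed histogram of two thousand hits shows strips with many hits alternating with strips with almost none, which is the sense in which the aggregate is predictable even though each individual hit is not.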

The mathematical object behind this particle distribution is what is known as the wave function, typically denoted by the Greek letter psi, ψ, or its capital equivalent Ψ. It predicts what the probability distribution of these “particles” will look like on the screen behind the slate after many individual experiments are run, or in quantum theoretical terms, the wave function describes the quantum state of a particle throughout a fixed spacetime interval. The equation that governs the wave function was formulated by the Austrian physicist Erwin Schrödinger in 1925 and published in 1926, and is commonly referred to in the scientific literature as the Schrödinger equation, analogous in the field of Quantum Mechanics to Newton’s second law of motion in classical physics.

This wave function represents a probability distribution of potential states or outcomes that describe the quantum state of a particle and predicts with a great degree of accuracy the potential location of a particle given a location or state of motion. With the discovery of the wave function, it now became possible to predict the potential locations or states of these subatomic particles, an extremely potent theoretical model that has led to all sorts of inventions and technological advancements since its discovery.
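For readers who want to see the mathematics written down, the following is the standard textbook form of the Schrödinger equation, together with the rule that turns a wave function into a probability distribution. It is given here under simplifying assumptions that are not spelled out in the text: a single non-relativistic particle of mass m moving in one dimension x, subject to a potential V(x), with ħ denoting the Planck constant divided by 2π.

```latex
% Time-dependent Schrödinger equation (one particle, one dimension, non-relativistic)
i\hbar \, \frac{\partial \psi(x,t)}{\partial t}
  = -\frac{\hbar^{2}}{2m} \, \frac{\partial^{2} \psi(x,t)}{\partial x^{2}}
    + V(x)\, \psi(x,t)

% Probability of finding the particle between x and x + dx at time t,
% and the requirement that the total probability equals one
P(x,t)\, dx = \lvert \psi(x,t) \rvert^{2} \, dx ,
\qquad
\int_{-\infty}^{\infty} \lvert \psi(x,t) \rvert^{2} \, dx = 1
```

The first line determines how the wave function changes over time; the second is the statistical reading of it, in which the squared magnitude of ψ gives the probability distribution of outcomes rather than a definite trajectory.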

Again, this implied that individual corpuscles were interfering with themselves when passing through the two slits on the slate, which was very odd indeed. In other words, the individual particles were exhibiting wave like characteristics even when they were sent through the double-slitted slate one at a time. This phenomenon was shown to occur with atoms as well as electrons and photons, confirming that all of these so-called particles exhibited wave like properties as well as particle like qualities, the behavior observed depending upon the type of experiment, or measurement as it were, that the “thing” was subject to.

Louis de Broglie was the physicist responsible for bridging the theoretical gap between matter, in this case electrons, and waves by establishing the symmetric relation between momentum and wavelength which had at its core Planck’s constant (the de Broglie equation). He described this mysterious and somewhat counterintuitive relationship between matter and waves as follows: “A wave must be associated with each corpuscle and only the study of the wave’s propagation will yield information to us on the successive positions of the corpuscle in space.”[7] In the Award Ceremony Speech in 1929 in honor of Louis de Broglie for his work in establishing the relationship between matter and waves for electrons, we find the essence of his ground breaking and still mysterious discovery, which remains a core characteristic of Quantum Mechanics to this day.

Louis de Broglie had the boldness to maintain that not all the properties of matter can be explained by the theory that it consists of corpuscles. Apart from the numberless phenomena which can be accounted for by this theory, there are others, according to him, which can be explained only by assuming that matter is, by its nature, a wave motion. At a time when no single known fact supported this theory, Louis de Broglie asserted that a stream of electrons which passed through a very small hole in an opaque screen must exhibit the same phenomena as a light ray under the same conditions. It was not quite in this way that Louis de Broglie’s experimental investigation concerning his theory took place. Instead, the phenomena arising when beams of electrons are reflected by crystalline surfaces, or when they penetrate thin sheets, etc. were turned to account. The experimental results obtained by these various methods have fully substantiated Louis de Broglie’s theory. It is thus a fact that matter has properties which can be interpreted only by assuming that matter is of a wave nature. An aspect of the nature of matter which is completely new and previously quite unsuspected has thus been revealed to us.[8]
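The de Broglie relation itself is short enough to compute directly. The sketch below assumes its standard form, wavelength = h / momentum, and a non-relativistic electron whose momentum follows from its kinetic energy; the 100 eV electron and the thrown ball are illustrative examples chosen here, not cases discussed by de Broglie.

```python
import math

# Sketch of the de Broglie relation, wavelength = h / momentum, assuming
# non-relativistic motion. The example objects are illustrative choices.

PLANCK_H = 6.62607015e-34        # joule-seconds
ELECTRON_MASS = 9.1093837015e-31 # kilograms
ELECTRON_VOLT = 1.602176634e-19  # joules per electron-volt


def de_broglie_wavelength(momentum_kg_m_s):
    """Wavelength in metres associated with a given momentum."""
    return PLANCK_H / momentum_kg_m_s


def electron_wavelength(kinetic_energy_ev):
    """Wavelength of a slow electron, using momentum = sqrt(2 * mass * energy)."""
    energy_joules = kinetic_energy_ev * ELECTRON_VOLT
    momentum = math.sqrt(2.0 * ELECTRON_MASS * energy_joules)
    return de_broglie_wavelength(momentum)


print("100 eV electron:", electron_wavelength(100.0), "m")
print("0.145 kg ball at 40 m/s:", de_broglie_wavelength(0.145 * 40.0), "m")
```

The electron’s wavelength comes out at roughly the spacing between atoms in a crystal, which fits the speech’s remark that the confirming experiments used electrons reflected by crystalline surfaces, while the ball’s wavelength is so small that no wave behavior could ever be noticed at the human scale.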

So by the 1920s then, you have a fairly well established mathematical theory to govern the behavior of subatomic particles, backed by a large body of empirical and experimental evidence, that indicates quite clearly that what we would call “matter” (or particles or corpuscles) in the classical sense, behaves very differently, or at least has very different fundamental characteristics, in the subatomic realm. It exhibits properties of a particle, or a thing or object, as well as a wave depending upon the type of experiment that is run. So the concept of matter itself then, as we had been accustomed to dealing with and discussing and measuring for some centuries, at least as far back as the time of Newton (1642-1727), had to be reexamined within the context of Quantum Mechanics. For in Newtonian physics, and indeed in the geometric and mathematical framework within which it was developed and conceived which reached far back into antiquity (Euclid circa 300 BCE), matter was presumed to be either a particle or a wave, but most certainly not both.

What complicated matters even further was that matter itself, again as defined by Newtonian mechanics and its extension via Relativity Theory, which taken together are commonly referred to as classical physics, was presumed to have some very definite, well-defined and fixed, real properties. Properties like mass, location or position in space, and velocity or trajectory were all presumed to have a real existence independent of whether or not they were measured or observed, even if the actual values were relative to the frame of reference of the observer. All of this hinged, of course, upon the notion that the speed of light was fixed no matter what the frame of reference of the observer. This was a fixed absolute: nothing could move faster than the speed of light. Well, even this seemingly self-evident notion, or postulate one might call it, ran into problems as scientists continued to explore the quantum realm.

So by the 1920s then, the way scientists viewed matter as we would classically consider it, within the context of Newton’s postulates from the early 1700s which were extended further into the notion of spacetime by Einstein, was encountering some significant difficulties when applied to the behavior of elements in the subatomic, quantum world. These are difficulties that persist to this day, it is important to point out. Furthermore, there was extensive empirical and scientific evidence which lent significant credibility to Quantum Theory, and which illustrated irrefutably that these subatomic elements behaved not only like waves, exhibiting characteristics such as interference and diffraction, but also like particles in the classic Newtonian sense, with measurable, well defined characteristics that could be quantified within the context of an experiment.

In his Nobel Lecture in 1929, Louis de Broglie, summed up the challenge for physicists of his day, and to a large extent physicists of modern times, given the discoveries of Quantum Mechanics as follows:

The necessity of assuming for light two contradictory theories – that of waves and that of corpuscles – and the inability to understand why, among the infinity of motions which an electron ought to be able to have in the atom according to classical concepts, only certain ones were possible: such were the enigmas confronting physicists at the time…[9]

The other major tenet of Quantum Theory that rests alongside wave-particle duality, and that provides even more complexity when trying to wrap our minds around what is actually going on in the subatomic realm, is what is sometimes referred to as the uncertainty principle, or the Heisenberg uncertainty principle, named after the German theoretical physicist Werner Heisenberg who first put forth the theories and models representing the probability distribution of outcomes of the position of these subatomic particles in certain experiments like the double-slit experiment previously described, even though the wave function itself was the discovery of Schrödinger.

The uncertainty principle states that there is a fundamental theoretical limit on the accuracy with which certain pairs of physical properties of atomic particles, position and momentum being the classic pair, can be known at any given time. In other words, physical quantities come in conjugate pairs, where only one of the measurements of a given pair can be known precisely at any given time: when one quantity in a conjugate pair is measured and becomes determined, its conjugate partner becomes indeterminate. What Heisenberg discovered, and proved mathematically, was that the more precisely one attempts to measure one of these complementary properties of subatomic particles, the less precisely the other associated complementary attribute can be determined or known.

Published by Heisenberg in 1927, the uncertainty principle states that there are fundamental, conceptual limits of observation in the quantum realm, another radical departure from the realistic and deterministic principles of classical physics, which held that all attributes of a thing were measurable at any given time, i.e. this thing or object existed and was real and had measurable and well defined properties irrespective of its state. It’s important to point out here that the uncertainty principle is a statement about a fundamental property of quantum systems as they are mathematically and theoretically modeled and defined, and of course empirically validated by experimental results, not a statement about the technology and method of the observational systems themselves. This wasn’t a practical problem, or a problem with the state of the instrumentation that was being used for measurement; it was a characteristic of the domain itself.
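The trade-off can be put into numbers with a brief sketch. It assumes the commonly quoted quantitative form of the principle for position and momentum, Δx·Δp ≥ ħ/2, which the text states only qualitatively; the choice of an electron pinned down to about the width of an atom is an illustrative example.

```python
import math

# Sketch of the position-momentum uncertainty relation, assuming the commonly
# quoted form delta_x * delta_p >= hbar / 2. Example values are illustrative.

HBAR = 1.054571817e-34           # reduced Planck constant, joule-seconds
ELECTRON_MASS = 9.1093837015e-31 # kilograms


def min_momentum_uncertainty(position_uncertainty_m):
    """Smallest momentum spread compatible with the given position spread."""
    return HBAR / (2.0 * position_uncertainty_m)


def min_velocity_uncertainty(position_uncertainty_m, mass_kg):
    """The same limit expressed as a spread in velocity (non-relativistic)."""
    return min_momentum_uncertainty(position_uncertainty_m) / mass_kg


atom_width = 1e-10  # metres, roughly the size of an atom
print("momentum spread:", min_momentum_uncertainty(atom_width), "kg m/s")
print("velocity spread:", min_velocity_uncertainty(atom_width, ELECTRON_MASS), "m/s")
```

Halving the position spread in this sketch doubles the minimum momentum spread, and for an electron confined to an atom the velocity spread already runs to hundreds of kilometres per second, whatever instrument is used.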

Max Born, who won the Nobel Prize in Physics in 1954 for his work in Quantum Mechanics, specifically for his statistical interpretation of the wave function, describes this further, seemingly mysterious attribute of the quantum realm as follows (the specific language he uses reveals at some level his interpretation of quantum theory, more on interpretations later):

…To measure space coordinates and instants of time, rigid measuring rods and clocks are required. On the other hand, to measure momenta and energies, devices are necessary with movable parts to absorb the impact of the test object and to indicate the size of its momentum. Paying regard to the fact that quantum mechanics is competent for dealing with the interaction of object and apparatus, it is seen that no arrangement is possible that will fulfill both requirements simultaneously.[10]

Whereas classical physics, meaning physics prior to the introduction of Relativity and Quantum Theory, distinguished between the study of particles and the study of waves, the introduction of Quantum Theory and wave-particle duality established that this classic intellectual bifurcation of physics at the macroscopic scale was wholly inadequate for describing and predicting the behavior of the “things” that existed in the subatomic realm, all of which took on the characteristics of both waves and particles depending upon the experiment and the context of the system being observed. Furthermore, the precision with which the state of a “thing” in the subatomic world could be defined was conceptually bound, establishing theoretical limits on how well the state of a given subatomic system could be defined, another divergence from classical physics. On top of this came the need for the mathematical principles of statistics and probability theory, as well as significant extensions to the underlying geometry, which were required to map the wave function itself in subatomic spacetime. All of this called quite clearly into question our classical materialistic notions, again based on realism and determinism, upon which scientific advancement had been built for centuries.

[1] With currently available instrumentation, our ability to measure the size of these subatomic particles goes down to approximately 10⁻¹⁶ cm, so measuring anything in the subatomic realm, and most certainly the general constituents of basic atomic elements such as quarks or gluons, is very challenging to say the least. Even estimating the size of an atom is not so straightforward, as the measurement is dictated by the circumference of the atom, which relies specifically on the size or radius of the “orbit” of the electrons of said atom. These are “particles” whose actual “location” cannot be “measured” in tandem with their momentum, a standard tenet of Quantum Mechanics, even though both together constitute what we consider measurement in the classic Newtonian sense.

[2] In some respects, even at the cosmic scale, there is still significant reason to believe that Relativity has room for improvement, as evidenced by what physicists call Dark Matter and Dark Energy. These are constructs introduced by theoretical physicists to describe matter and energy that they believe should exist according to Relativity Theory but whose existence is as yet “undiscovered”. Both Dark Matter and Dark Energy represent active lines of research in modern day Cosmology.

[3] Quantum theory has its roots in this initial hypothesis by Planck, and in this sense he is considered by some to be the father of quantum theory and quantum mechanics. It is for this work in the discovery of “energy quanta” that Max Planck received the Nobel Prize in Physics in 1918, nearly two decades after publishing.

[4] Einstein termed this behavior the photoelectric effect, and it’s for this work that he won the Nobel Prize in Physics in 1921.

[5] The Planck constant was first described as the proportionality constant between the energy (E) of a photon and the frequency (ν) of its associated electromagnetic wave. This relation between the energy and frequency is called the Planck relation or the Planck–Einstein equation: E = hν, where h is the Planck constant.

[6] The double-slit experiment was first devised and used by Thomas Young in the early nineteenth century to display the wave-like characteristics of light. It wasn’t until the technology was available to send a single “particle” (a photon or electron for example) at a time that the wave-like and stochastically distributed nature of the underlying “particles” was discovered as well. http://en.wikipedia.org/wiki/Young%27s_interference_experiment

[7] Louis de Broglie, “The wave nature of the electron”, Nobel Lecture, December 12, 1929.

[8] Presentation Speech by Professor C.W. Oseen, Chairman of the Nobel Committee for Physics of the Royal Swedish Academy of Sciences, on December 10, 1929. Taken from http://www.nobelprize.org/nobel_prizes/physics/laureates/1929/press.html.

[9] Louis de Broglie, “The wave nature of the electron”, Nobel Lecture, December 12, 1929.

[10] Max Born, “The statistical interpretation of quantum mechanics”, Nobel Lecture, December 11, 1954.
